Data Preparation & Preprocessing

K-Fold Cross-Validation

This exercise explores k-fold cross-validation, one of the most common techniques for evaluating a machine learning model's performance.

Train-Test Split

Before we dive into k-fold cross-validation, it is important to review the concept of train-test split.

When training and evaluating a model in machine learning, it is often useful to randomly split our dataset into training and testing sets. The idea is to use the training set (typically about 80% of the dataset) for training our model and to use the remaining testing set for evaluating our model.
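The random split described above can be sketched in a few lines of plain Python. This is only an illustration: the function name `train_test_split`, the `train_fraction` and `seed` parameters, and the toy `data` list are assumptions made for this example and are not part of the exercise.

```python
import random

def train_test_split(rows, train_fraction=0.8, seed=0):
    """Randomly split rows into (train, test) lists."""
    indices = list(range(len(rows)))
    # Shuffle the indices rather than the data, so the input is left untouched.
    random.Random(seed).shuffle(indices)
    cut = int(len(rows) * train_fraction)  # number of training examples
    train = [rows[i] for i in indices[:cut]]
    test = [rows[i] for i in indices[cut:]]
    return train, test

# Hypothetical toy dataset with 10 rows.
data = [[i, i * i] for i in range(10)]
train, test = train_test_split(data)
print(len(train), len(test))  # 8 2
```

Which rows end up in each set depends on the seed, which is exactly the source of variability discussed next.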

However, because the split is random, a single split can be an unlucky one: many of the most useful training examples may end up in the testing set, which prevents the model from learning well. To reduce this risk, we can use k-fold cross-validation.

Definition

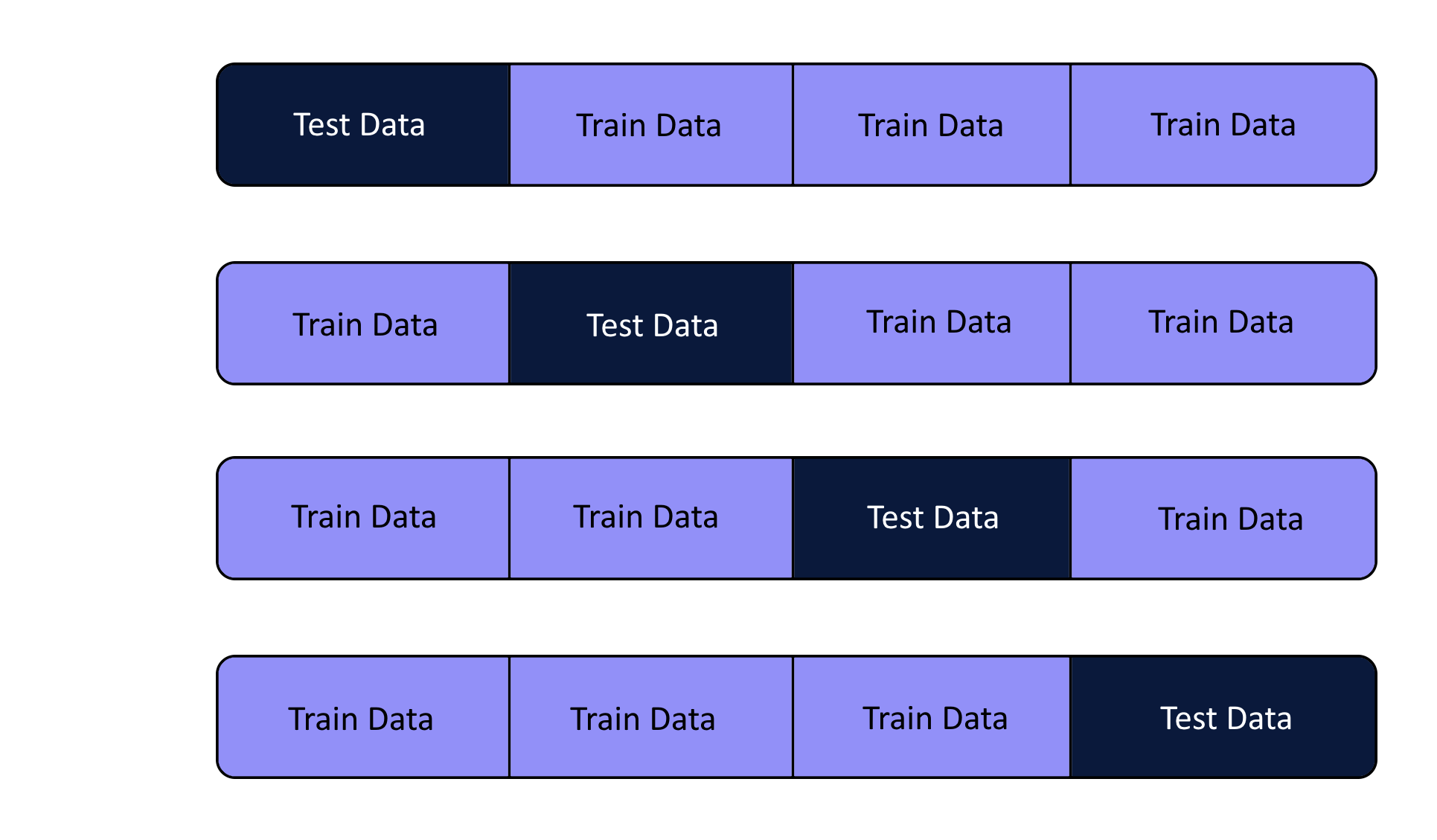

In k-fold cross-validation, we split the dataset into k smaller sets, or "folds". Then, we train our model k times, each time using a different fold as the testing set and the remaining folds as the training set. Finally, we collect the performances of the models across all k folds and use the model that performs the best on the testing set.

For example, if we use 4-fold cross-validation, we would split our dataset into 4 equal-sized folds and train the model 4 times. In each iteration, we would use a different fold as the testing set and the other 3 folds as the training set. This way, we can use all of the data for both training and testing without overfitting the model to any particular subset of the data.
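To make the fold bookkeeping concrete, here is a minimal sketch that lists which row indices serve as the testing set and which as the training set in each iteration. The helper name `k_fold_indices` is hypothetical, and shuffling is deliberately left out so the pattern is easy to read; a real split would normally shuffle the indices first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of the k folds."""
    # Spread any remainder over the first folds so fold sizes differ by at most one.
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        stop = start + base + (1 if fold < extra else 0)
        test_idx = list(range(start, stop))
        # Everything outside the current fold is training data.
        train_idx = list(range(0, start)) + list(range(stop, n_samples))
        yield train_idx, test_idx
        start = stop

# 8 samples, 4 folds: each fold tests on 2 rows and trains on the other 6.
for fold, (train_idx, test_idx) in enumerate(k_fold_indices(8, 4), start=1):
    print(f"Fold {fold}: test={test_idx} train={train_idx}")
```

Each index appears in exactly one testing set, and in the training set of the other three iterations.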

Note: Technically, the testing set in each of the folds is really a validation set, and not a testing set. This is because we are using the performance of our model on this set to choose which model to select. This is different from the testing set, which is typically used to evaluate the final performance of the model after all training is completed and to report the final accuracy of the model. However, we will refer to this as the testing set throughout this exercise, just to avoid confusion.

Exercise

Write a Python iterator class, KFoldCrossValidation, where the constructor takes in a multi-dimensional array X and the number of folds k, and sets up the iterator to split the dataset into k folds. Splitting must occur at axis 0. Write the __next__() method that returns two arrays, train_data and test_data. These arrays must correspond to the training data and testing data of the current iteration respectively.

Sample Test Cases

Test Case 1

Input:

[
  [[2, 3],
   [1, 0]],
  2
]

Output:

======
Fold 1
======
train_data = [[2, 3]]
test_data = [[1, 0]]

======
Fold 2
======
train_data = [[1, 0]]
test_data = [[2, 3]]

Note: The values listed for train_data and test_data represent one of many possible splits.

Test Case 2

Input:

[
  [[10, 0],
   [ 2, 11],
   [ 1, 8],
   [ 4, 6],
   [ 5, 9],
   [ 3, 7]],
  3
]

Output:

======
Fold 1
======
train_data = [
  [ 5, 9],
  [ 2, 11],
  [ 4, 6],
  [10, 0]
]
test_data = [
  [1, 8],
  [3, 7]
]

======
Fold 2
======
train_data = [
  [ 1, 8],
  [ 3, 7],
  [ 4, 6],
  [10, 0]
]
test_data = [
  [ 5, 9],
  [ 2, 11]
]

======
Fold 3
======
train_data = [
  [ 1, 8],
  [ 3, 7],
  [ 5, 9],
  [ 2, 11]
]
test_data = [
  [ 4, 6],
  [10, 0]
]

Note: The values listed for train_data and test_data represent one of many possible splits.
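
For reference, one possible way to approach the exercise is sketched below. It is not the official solution. It assumes X is a nested list (or any sequence that can be indexed along its first axis), and it adds an optional `seed` parameter, which is not part of the exercise statement, purely to make the shuffling reproducible.

```python
import random

class KFoldCrossValidation:
    """Iterate over (train_data, test_data) pairs, splitting X along axis 0."""

    def __init__(self, X, k, seed=None):
        if not 1 < k <= len(X):
            raise ValueError("k must satisfy 1 < k <= len(X)")
        self.X = X
        self.k = k
        # Shuffle the row indices once so the folds are random but disjoint.
        indices = list(range(len(X)))
        random.Random(seed).shuffle(indices)
        # Deal the shuffled indices into k folds whose sizes differ by at most one.
        self.folds = [indices[i::k] for i in range(k)]
        self.current = 0

    def __iter__(self):
        return self

    def __next__(self):
        if self.current >= self.k:
            raise StopIteration
        test_idx = self.folds[self.current]
        # All folds except the current one form the training set.
        train_idx = [i for j, fold in enumerate(self.folds)
                     if j != self.current for i in fold]
        self.current += 1
        train_data = [self.X[i] for i in train_idx]
        test_data = [self.X[i] for i in test_idx]
        return train_data, test_data

# Usage with the input from Test Case 2; the exact rows per fold depend on the shuffle.
X = [[10, 0], [2, 11], [1, 8], [4, 6], [5, 9], [3, 7]]
for fold, (train_data, test_data) in enumerate(KFoldCrossValidation(X, 3, seed=0), start=1):
    print(f"Fold {fold}: train_data={train_data} test_data={test_data}")
```

Because the rows are shuffled, the output will generally differ from the sample outputs above while still being a valid split: every row appears in exactly one test_data, and each train_data holds the remaining rows.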